Multi-armed Bandits

A k-armed Bandit Problem

In our -armed bandit problem, each of the actions has an expected or mean reward given that that action is selected; let us call this the value of that action. We denote the action selected on time step as , and the corresponding reward as . The value then of an arbitrary action , denoted , is the expected reward given that a is selected:

is not known, so we need to estimate it. We denote the estimated value of action a at time step as . We would like to be close to .

Sample Average Method

By the law of large numbers, converges to .

Greedy Action

The simplest action selection rule is to select one of the actions with the highest estimated value, that is, one of the greedy actions.

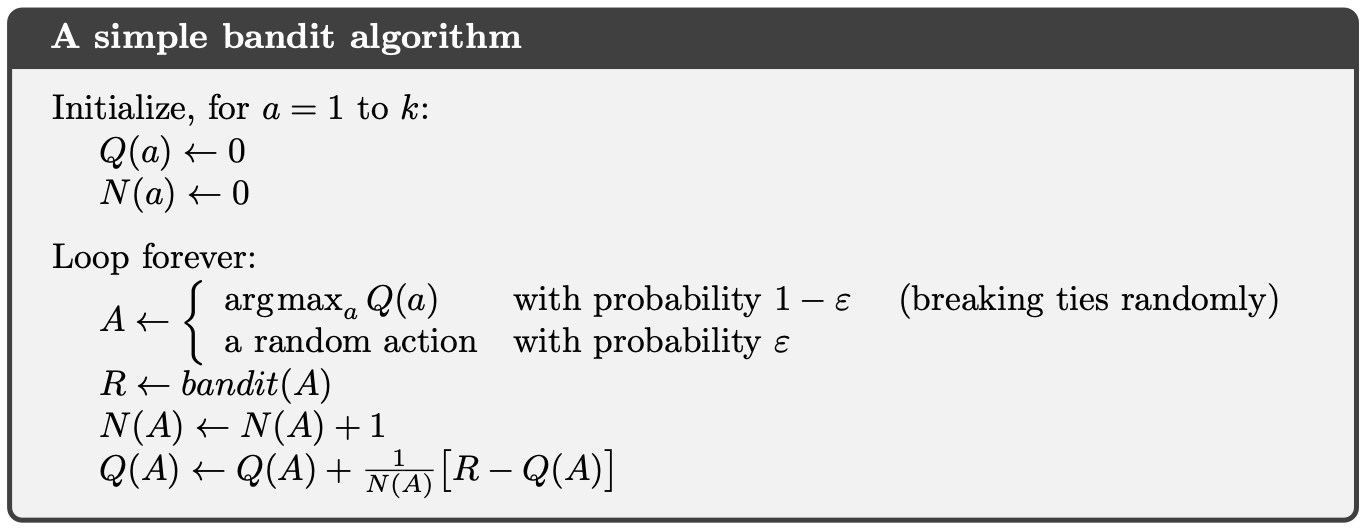

-Greedy Method

Greedy action selection always exploits current knowledge to maximize immediate reward; it spends no time at all sampling apparently inferior actions to see if they might really be better. A simple alternative is to behave greedily most of the time, but every once in a while, say with small probability , instead select randomly from among all the actions with equal probability, independently of the action-value estimates.

Incremental Implementaton

The action-value methods we have discussed so far all estimate action values as sample averages of observed rewards. We now turn to the question of how these averages can be computed in a computationally efficient manner, in particular, with constant memory and constant per-time-step computation.

Let denote the estimate of its action value after it has been selected times, which we can now write simply as

Given and the th reward, , the new average of all rewards can be computed by

The general form is

Pseudocode

My Code

Coming soon

Tracking a Nonstationary Problem

As noted earlier, we often encounter reinforcement learning problems that are effectively nonstationary. In such cases, it makes sense to give more weight to recent rewards than to long-past rewards.

The first term tells us that the contribution decreases exponentially with time. The second term tells us the rewards further back in time contribute exponentially less to the sum. Taken together, we see that the influence of our initialization of goes to zero with more and more data. The most recent rewards contribute most to our current estimate.

Optimistic Initial Values

Previously the initial estimated values were assumed to be 0, which is not necessarily optimistic. If we set it to a higher value, by the property of greedy action, the initial reward may not be as big as the initial estimated value. Therefore, it will keep exploring at an early stage.

But, this method is not well-suited for non-stationary problems, and we may not know what the optimistic initial value should be.

Upper-Confidence-Bound (UCB) Action Selection

Exploration is needed because there is always uncertainty about the accuracy of the action-value estimates. It would be better to select among the non-greedy actions according to their potential for actually being optimal, taking into account both how close their estimates are to being maximal and the uncertainties in those estimates. One effective way of doing this is to select actions according to

The idea is that the square-root term is a measure of the uncertainty or variance in the estimate of ’s value. The quantity being maxed over is thus a sort of upper bound on the possible true value of action , with determining the confidence level. Each time is selected the uncertainty is presumably reduced: increments, and, as it appears in the denominator, the uncertainty term decreases. On the other hand, each time an action other than a is selected, increases but does not; because t appears in the numerator, the uncertainty estimate increases. The use of the natural logarithm means that the increases get smaller over time, but are unbounded; all actions will eventually be selected, but actions with lower value estimates, or that have already been selected frequently, will be selected with decreasing frequency over time.